How to Give AI Full Context of Your Codebase

Learn how to enhance AI coding assistants by providing them with full context of your codebase, improving efficiency and accuracy.

AI coding assistants often struggle without full access to your codebase, leading to repetitive explanations and inconsistent suggestions. Providing full project context can transform these tools into effective collaborators by enabling them to understand your architecture, dependencies, and coding patterns. Here's how to prepare your codebase for AI:

- Organize Your Project: Use clear folder hierarchies, descriptive file names, and modular structures to make navigation easier.

- Document Thoroughly: Include README files, inline comments, architecture diagrams, and test documentation to guide the AI.

- Add Metadata: Use dependency graphs, type definitions, and configuration files to provide structured insights.

- Set Up Your IDE: Configure AI tools to index your codebase, exclude irrelevant files, and test their retrieval accuracy.

- Leverage Embeddings: Use embedding-based retrieval to fit the most relevant code into the model's context window, breaking files down into smaller units like functions or classes.

- Structure Tasks: Create reusable, machine-readable task context files (e.g., YAML or JSON) to define goals, scope, and constraints.

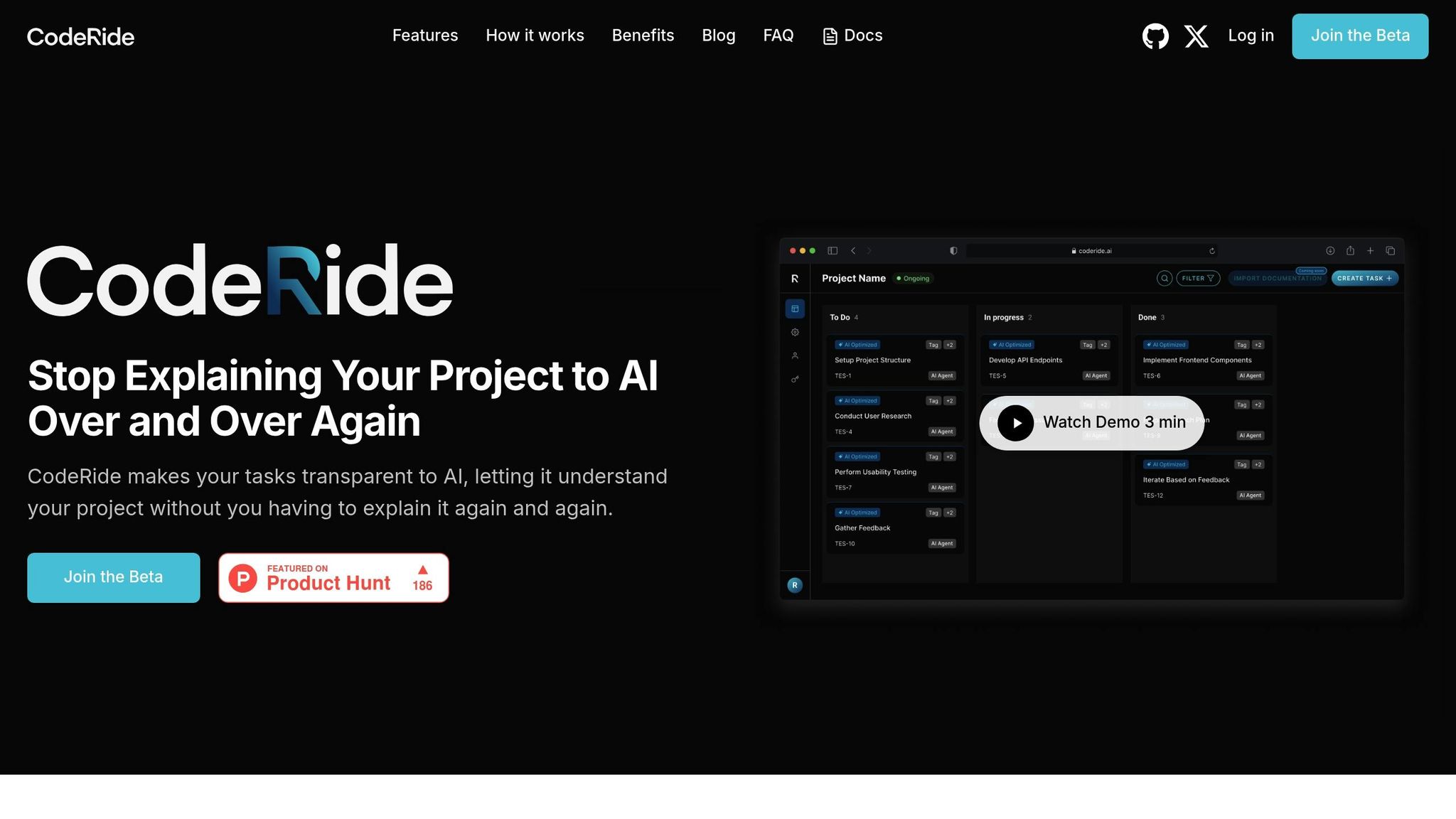

Tools like CodeRide simplify this process by automatically analyzing your project, learning your coding style, and enabling autonomous workflows. With these steps, you can save time, reduce errors, and improve the quality of AI-generated code.

Preparing Your Codebase for AI Context

To make AI tools more effective in understanding your codebase, you need to organize and document your project in a way that ensures quick and accurate retrieval of information. Tackling issues like context amnesia starts with these practical steps to prepare your codebase for seamless AI integration.

Standardize Project Organization

A clear and consistent structure is the backbone of an AI-friendly codebase. Organized folder hierarchies and well-defined module boundaries make it easier for AI tools to navigate your project without stumbling over scattered or redundant files.

Modules should follow the principle of single responsibility. Each component should focus on one specific task, which helps AI understand its purpose and role within the system. For instance, an authentication module should exclusively handle user authentication, while a database module should focus solely on data access. This clarity allows AI to pinpoint where modifications or improvements are needed.

Descriptive naming conventions are another key element. Instead of generic names like utils.js, opt for more specific ones like dateFormatters.js to clearly indicate a file's purpose. This makes it easier for AI to grasp the functionality of different components.

To further streamline organization, group related functionalities into modular structures. For example, all user-related components - models, controllers, and services - should reside within a dedicated "user" module. This approach prevents AI from wasting time searching through unrelated folders and ensures it can quickly locate the code it needs.

Maintain Clear Documentation

Once your codebase is organized, documentation becomes the guide that helps AI understand the logic behind your project. Start with README files that outline your project's core purpose, functionality, and how its components interact.

Adding architecture diagrams can provide a visual aid. Tools like Mermaid can create flowcharts that map out how data flows through your application, offering AI a clearer picture of your system's structure. These text-based diagrams are especially useful because they’re easily interpretable by AI.

Inline comments and API documentation are equally important. Use comments to explain the reasoning behind specific pieces of code, detailing expected inputs, outputs, and behaviors. This helps AI suggest accurate function calls and identify potential integration challenges.

Don't overlook the importance of test documentation. Up-to-date test cases act as executable blueprints, showing AI the expected behavior of your code and ensuring AI-generated suggestions align with those expectations.

Generate Machine-Readable Metadata

Machine-readable metadata gives AI structured insights into your codebase, allowing it to process information more efficiently. Dependency graphs are a great example - they show how various components interact, helping AI predict the ripple effects of any changes.
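As a rough illustration of what a dependency graph captures, the sketch below builds one from import statements using Python's standard `ast` module. The module names and source strings are hypothetical. A real project would read files from disk and also handle relative and dynamic imports, which this sketch ignores.

```python
import ast

# Hypothetical project: module name -> source text.
SOURCES = {
    "auth": "import db\nfrom tokens import sign\n",
    "db": "import sqlite3\n",
    "tokens": "import hashlib\n",
    "api": "import auth\nimport db\n",
}

def import_graph(sources):
    """Map each module to the set of project modules it imports."""
    graph = {module: set() for module in sources}
    for module, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, ast.Import):
                names = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                names = [node.module]
            else:
                continue
            for name in names:
                root = name.split(".")[0]
                # Keep only edges that point at other modules in this project.
                if root in sources and root != module:
                    graph[module].add(root)
    return graph

def dependents_of(graph, target):
    """Modules that import `target` directly, i.e. where a change may ripple."""
    return {module for module, deps in graph.items() if target in deps}

GRAPH = import_graph(SOURCES)
```

Calling `dependents_of(GRAPH, "db")` lists the modules that would need a second look after a change to `db`.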

If you're working with dynamically typed languages, consider adding type definitions or JSDoc comments to clarify data structures and function signatures. This additional context allows AI to generate more precise code and catch potential type errors.
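The same idea expressed in Python uses type hints plus a docstring. This is a minimal sketch; `User` and `active_emails` are hypothetical names chosen for illustration.

```python
from typing import TypedDict

class User(TypedDict):
    """Shape of a user record as passed between modules."""
    id: int
    email: str
    is_active: bool

def active_emails(users: list[User]) -> list[str]:
    """Return the email addresses of active users, in input order."""
    return [user["email"] for user in users if user["is_active"]]
```

With the record shape and return type spelled out, an assistant (or a type checker) has less to guess about what the function accepts and produces.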

For APIs, maintain OpenAPI specifications or GraphQL schemas that detail endpoints, request/response formats, and validation rules. These schemas act as contracts that AI can reference when generating or modifying API-related code.

Your configuration files - like package.json, environment variables, or build settings - should also be well-documented. This ensures AI understands your project's dependencies and deployment requirements.

Finally, consider creating a project manifest file. This document should summarize key aspects of your codebase, such as the technologies used, architectural patterns, coding standards, and any special conventions. By providing a single source of truth, you give AI all the context it needs to make informed and efficient code suggestions.
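One possible shape for such a manifest is sketched below. The field names and values are assumptions made for illustration, not a standard format, so adapt them to whatever your tools actually read.

```python
import json

# Hypothetical manifest; every key and value here is an illustrative assumption.
MANIFEST = {
    "name": "example-shop",
    "stack": ["Python 3.12", "PostgreSQL"],
    "architecture": "modular monolith, one package per business domain",
    "conventions": {
        "naming": "snake_case for functions, PascalCase for classes",
        "tests": "one test module per source module",
    },
}

REQUIRED_FIELDS = ("name", "stack", "architecture", "conventions")

def missing_manifest_fields(manifest):
    """Return the required fields that are absent or empty, in a fixed order."""
    return [field for field in REQUIRED_FIELDS if not manifest.get(field)]

# Serialized form that could be committed next to the README.
manifest_json = json.dumps(MANIFEST, indent=2)
```

A small check like `missing_manifest_fields` can run in CI so the manifest does not silently drift out of date.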

Setting Up AI Context in Your IDE

To get the most out of AI tools in your coding environment, it's crucial to configure your IDE so the AI can access and understand your code effectively. This setup ensures the AI can provide meaningful and relevant assistance. Below, we’ll walk through integrating AI tools, fine-tuning their context scoping, and testing the setup to make sure everything works smoothly.

Configure IDE Integration with AI Tools

AI coding assistants integrate with popular IDEs like VS Code or the JetBrains family, but proper configuration goes beyond just installing extensions. To maximize their potential, you'll need to ensure they can index your code effectively.

Many AI tools work by splitting your project into chunks and creating embeddings for them. Some tools store these embeddings locally, which keeps your code on your machine while enabling quick lookups; others compute or store them on a remote service, so check your tool's documentation if privacy matters. Start by configuring ignore files to exclude unnecessary parts of your project, such as large binary files, generated code, or dependency folders. Many tools support custom ignore files like .cursorignore or .continueignore to handle this.
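To show the effect of an ignore file, here is a simplified sketch that filters a file list with glob patterns. The patterns and paths are hypothetical, and the matching uses Python's `fnmatch`, which is simpler than the gitignore-style rules that real ignore files typically follow.

```python
from fnmatch import fnmatchcase

# Hypothetical ignore patterns; in fnmatch, "*" also matches "/" characters.
IGNORE_PATTERNS = ["node_modules/*", "dist/*", "*.png", "*.min.js"]

def is_ignored(path, patterns=IGNORE_PATTERNS):
    """True if the path matches any ignore pattern."""
    return any(fnmatchcase(path, pattern) for pattern in patterns)

def indexable(paths, patterns=IGNORE_PATTERNS):
    """Keep only the paths that should be sent to the indexer."""
    return [path for path in paths if not is_ignored(path, patterns)]

PATHS = [
    "src/auth/login.py",
    "node_modules/react/index.js",
    "assets/logo.png",
    "dist/bundle.min.js",
    "README.md",
]
```

Running `indexable(PATHS)` leaves only the source file and the README, which is the kind of result worth confirming in your own tool after editing its ignore file.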

For teams using multi-root workspaces, many AI tools automatically detect and index all codebases within the workspace. This is especially useful when working on microservices or monorepos, where multiple repositories are involved.

Scoping and Filtering Context

To make AI suggestions more relevant, you’ll need to strategically define the scope of context it uses. Most tools allow you to specify what parts of your project the AI should focus on through context providers.

Commands like @Codebase, @Folder, or @workspace (the exact names vary by tool) help you narrow down the scope. For instance, using @Folder with a specific directory name ensures the AI focuses only on files within that folder, making its suggestions more precise.

Some tools also let you adjust parameters for how much code the AI retrieves and includes in its context. Advanced features like re-ranking algorithms further refine this process, prioritizing the most relevant code snippets based on your current task. Once you’ve set the scope, test its effectiveness to ensure the AI is pulling the right information.

Testing AI Integration

Testing is essential to confirm that the AI is correctly retrieving and using context from your project. A good starting point is to check whether the AI can access files across modules and understand recent changes.

Here are some example workflows you can use to validate the setup:

- Ask the AI to refactor a function that’s used in multiple files.

- Request help implementing a feature that spans several modules.

Pay attention to how well the AI handles these tasks. If it provides accurate, context-aware suggestions, your setup is likely on point. On the other hand, if you notice gaps - like missing dependencies or overlooked constraints - it could mean some files aren’t being indexed properly or that the context scope needs tweaking.

You should also test the AI’s understanding of historical context, such as merged pull requests or recent commits. Tools that can index this kind of historical data tend to offer more informed suggestions, reflecting your project’s latest changes and patterns.

Using Embeddings and Context Windows for Code Retrieval

Once you've set up your IDE to provide context, you can take your AI assistance further with indexing techniques. A model can only read a limited amount of text at once (its context window), so AI tools can't process an entire large codebase in one go. Embedding-based retrieval bridges the gap: it helps the AI zero in on the most relevant code snippets and fit them into the context window, giving precise assistance without overloading the model.

Indexing Your Codebase with Embeddings

Embeddings convert your code into numeric vectors that capture its meaning. Think of them as coordinates: snippets that do similar things land close together, which lets the AI find semantically related code rather than relying on basic keyword matching.

Many AI coding tools automatically generate embeddings for your project when you open it. These embeddings can include not just your source code but also documentation, configuration files, and even commit messages. The process happens in the background, and the time it takes depends on the size of your project.

A critical part of embedding is granular segmentation. Instead of creating one embedding for an entire file, advanced tools break it down into smaller units - like functions, classes, or logical blocks of code. This way, when you're working on a specific function, the AI can retrieve related pieces of code from across your project without pulling in irrelevant content from the same file.
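A minimal sketch of this kind of segmentation for Python source is shown below, using the standard `ast` module to split a file into top-level functions and classes. The `Chunk` type and the sample source are hypothetical, and real tools may use different parsers and chunk boundaries.

```python
import ast
from dataclasses import dataclass

@dataclass
class Chunk:
    name: str
    kind: str        # "function" or "class"
    start_line: int  # 1-based, inclusive
    end_line: int
    text: str

def chunk_source(code):
    """Split source into one chunk per top-level function or class."""
    chunks = []
    for node in ast.parse(code).body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            kind = "function"
        elif isinstance(node, ast.ClassDef):
            kind = "class"
        else:
            continue  # imports and module-level statements are skipped here
        text = ast.get_source_segment(code, node)
        chunks.append(Chunk(node.name, kind, node.lineno, node.end_lineno, text))
    return chunks

SAMPLE = '''import os

def load(path):
    return open(path).read()

class Parser:
    def parse(self, text):
        return text.split()
'''

CHUNKS = chunk_source(SAMPLE)
```

Each chunk can then be embedded on its own, so a query about `load` does not have to pull in the unrelated `Parser` class.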

To store these embeddings locally, many tools use a vector index or vector database. FAISS (a similarity-search library) and Chroma (an embedding database) are popular options, offering quick searches across thousands of files while keeping your code within your development environment.
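The retrieval step can be sketched with a toy in-memory index. Note the assumption: `embed` below just counts word tokens, which only matches shared words. Real tools use a learned embedding model and a vector store such as the ones named above. The chunk names and texts are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag of lowercase word counts (not a learned model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(index, query, k=2):
    """Return up to k chunk names, most similar first, skipping zero matches."""
    query_vec = embed(query)
    scored = sorted(((cosine(query_vec, vec), name) for name, vec in index.items()), reverse=True)
    return [name for score, name in scored[:k] if score > 0]

# Hypothetical chunks, keyed by a module.function style name.
INDEX = {
    "auth.verify_token": embed("def verify_token(token): check token signature and expiry"),
    "db.get_user": embed("def get_user(user_id): fetch user row from database"),
    "utils.format_date": embed("def format_date(value): format date as iso string"),
}
```

Swapping `embed` for a real embedding model and `INDEX` for a vector store keeps the same overall flow: embed the query, score stored vectors, return the top matches.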

Structuring Indices for Better Retrieval

How you organize your embedding indices can have a big impact on the AI's performance. Instead of treating the entire codebase as one massive index, you can structure indices to reflect your project's layout and workflow.

- Module-based indexing: For large projects, creating separate indices for each major module or service is particularly effective. For example, if you're working on authentication, the AI will first search within authentication-related modules before expanding outward.

- File-type grouping: Another strategy is to organize indices by file type. Separate source code, tests, documentation, and configuration files into their own indices. This prevents the AI from suggesting test code when you're looking for production logic, or vice versa.

- Temporal indexing: By giving more weight to recently modified files, the AI can focus on the most relevant parts of your codebase. Recent updates often reflect current priorities, and tools typically manage this weighting automatically. Some even let you tweak parameters to better fit your team's workflow.

Many setups use composite indices that combine these strategies. For instance, you might prioritize recent changes in the current module, then expand to related modules, and finally include patterns from the broader codebase. This layered approach improves both precision and efficiency.
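One way to picture a composite ranking is a single score that blends semantic similarity, a same-module boost, and a recency term. The sketch below is an assumption about how such a blend could look. The weights, half-life, and candidate data are made up, and actual tools may combine these signals quite differently.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    path: str
    module: str
    similarity: float       # semantic similarity to the query, 0..1
    days_since_edit: float

def composite_score(candidate, current_module, half_life_days=30.0,
                    module_boost=0.2, recency_weight=0.1):
    """Blend similarity, a same-module boost, and exponentially decaying recency."""
    recency = 0.5 ** (candidate.days_since_edit / half_life_days)
    boost = module_boost if candidate.module == current_module else 0.0
    return candidate.similarity + boost + recency_weight * recency

def rank(candidates, current_module):
    """Sort candidates from most to least relevant for the current module."""
    return sorted(candidates, key=lambda c: composite_score(c, current_module), reverse=True)

CANDIDATES = [
    Candidate("auth/session.py", "auth", 0.60, 2),
    Candidate("billing/invoice.py", "billing", 0.70, 1),
    Candidate("auth/legacy.py", "auth", 0.55, 300),
]
```

With these numbers, working in `auth` lifts `auth/session.py` above the slightly more similar billing file, while the long-untouched `auth/legacy.py` still ranks last.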

Testing How Well Retrieval Works

To make sure your indexing strategies are working, it's important to test how effectively the AI retrieves context. The goal is to ensure that the system consistently pulls the right information for complex tasks without getting sidetracked by irrelevant matches.

- Cross-module dependency testing: Check how well the AI handles interconnected code. For example, ask it to modify a function that's used across multiple modules or assist with features requiring coordination between services.

- Historical context validation: See if the AI can access your project's history. Ask it to explain recent architectural changes or ensure consistency with current coding patterns. The AI should surface relevant commit messages, pull request discussions, and recently edited files.

- Precision testing: If you request help with a very specific change, does the AI stay focused or bring in loosely related content? If it retrieves too much irrelevant information, you may need to adjust your indexing granularity or filtering settings.

- Performance monitoring: Keep an eye on retrieval speed and how the AI utilizes context windows. Many tools provide metrics to help you fine-tune your setup, ensuring the system scales smoothly as your codebase grows.
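Precision testing in particular can be made measurable. The sketch below computes precision and recall over the top k retrieved files for one query. The file paths and the hand-labeled set of relevant files are hypothetical, and you would collect the retrieved list from whatever your tool reports.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved items that are relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(1 for item in top if item in relevant) / len(top)

def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant items that appear in the top k."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & set(relevant)) / len(relevant)

# Hypothetical result for the query "change token expiry handling".
RETRIEVED = ["auth/token.py", "utils/date.py", "auth/middleware.py", "db/user.py"]
RELEVANT = {"auth/token.py", "auth/middleware.py", "api/routes.py"}
```

Low precision suggests the index is pulling in loosely related content, while low recall suggests relevant files are missing from the index or being filtered out.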

Structuring Task Context for Consistent AI Results

Once you've optimized code retrieval using embeddings and context windows, the next step is to focus on structuring task context. Clear and structured instructions are key to ensuring the AI produces consistent and reliable results. This approach works hand-in-hand with your streamlined code retrieval process, helping the AI understand and execute your directives effectively.

Creating Task Context Files

Task context files are essential for defining the goal, scope, and boundaries of a task. These files should include key elements that guide the AI through specific code changes:

- Intent: Clarify the business goal or technical objective.

- Scope: Define the parts of the codebase to modify and those to leave untouched.

- Impacted Modules: List the files, functions, or services that need updates.

- Constraints: Specify technical limitations, performance benchmarks, or architectural requirements.

- Acceptance Criteria: Outline measurable conditions for task completion.

For instance, instead of vaguely asking the AI to "implement rate limiting", you could specify: "Add JWT-based rate limiting for API endpoints, focusing on middleware and the user controller, while ensuring response times stay under 100ms."

To make these files machine-readable, use formats like YAML or JSON. Many teams organize these files in a dedicated .ai-context directory within their repository, making them easy to version-control and access.
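A task context file covering the five elements above might look like the JSON in this sketch, loaded and checked with Python's standard `json` module. The key names, file paths, and the file location in the comment are assumptions for illustration, not a format any particular tool requires.

```python
import json

# Hypothetical contents of .ai-context/rate-limiting.json
TASK_CONTEXT_JSON = """
{
  "intent": "Add JWT-based rate limiting for API endpoints",
  "scope": {
    "modify": ["middleware/", "controllers/user_controller.py"],
    "do_not_touch": ["migrations/"]
  },
  "impacted_modules": ["middleware/rate_limit.py", "controllers/user_controller.py"],
  "constraints": ["Response times stay under 100ms"],
  "acceptance_criteria": ["Requests over the limit receive HTTP 429"]
}
"""

REQUIRED_KEYS = {"intent", "scope", "impacted_modules", "constraints", "acceptance_criteria"}

def load_task_context(raw):
    """Parse a task context and fail loudly if a required element is missing."""
    data = json.loads(raw)
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data

TASK = load_task_context(TASK_CONTEXT_JSON)
```

Validating the file before handing it to an assistant catches incomplete task definitions early, when they are cheapest to fix.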

Reusing Task Context for Automation

Structured task context files aren't just one-off tools - they can streamline repetitive work and automate various development tasks. By reusing these files, you can automate tasks like creating pull requests, generating test cases, or implementing recurring features.

For example, if your task context includes acceptance criteria, the AI can use those to generate automated test cases that cover edge scenarios and integration points. This ensures functionality remains intact even as the code evolves.

These files can also act as templates for similar tasks across projects. A context file for implementing API rate limiting could be adapted for different endpoints by simply adjusting the scope and impacted modules while retaining the core intent and constraints.
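Adapting a template can be as small as copying it and overriding two fields. The sketch below assumes a context stored as a plain dictionary; the template contents and the `adapt_context` helper are hypothetical.

```python
import copy

# Hypothetical reusable template for rate-limiting tasks.
RATE_LIMIT_TEMPLATE = {
    "intent": "Add rate limiting to an API endpoint group",
    "scope": [],
    "impacted_modules": [],
    "constraints": ["Response times stay under 100ms"],
}

def adapt_context(template, *, scope, impacted_modules):
    """Copy a template, replacing scope and impacted modules only."""
    context = copy.deepcopy(template)  # deep copy so the template is never mutated
    context["scope"] = list(scope)
    context["impacted_modules"] = list(impacted_modules)
    return context

ORDERS_TASK = adapt_context(
    RATE_LIMIT_TEMPLATE,
    scope=["api/orders/"],
    impacted_modules=["api/orders/routes.py"],
)
```

The intent and constraints carry over unchanged, which keeps similar tasks consistent across endpoints.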

To maximize efficiency, maintain a library of context files organized by feature type, architectural design, or business function. Teams often create standardized templates for common tasks like adding API endpoints, handling database migrations, or integrating third-party tools. This approach reduces risk and breaks tasks into manageable, reusable components.

Scoping Tasks to Minimize Risk

Breaking down large tasks into smaller, well-defined pieces helps the AI deliver more reliable results. Smaller tasks are easier for the AI to grasp, minimizing the chance of unintended side effects in your codebase.

For example, instead of asking the AI to "refactor the entire user management system", you could divide the work into smaller tasks: extract user validation logic into its own service, update the user controller to use the new service, migrate existing tests, and update the documentation. Each of these smaller tasks is self-contained, making it easier to implement and test independently.

Smaller tasks also use fewer tokens, which helps the AI stay focused on the relevant context. By scoping tasks to specific modules or functions, you reduce the risk of the AI being overwhelmed by unrelated code.

To define the right scope, map out dependencies in the code. If a function interacts with multiple modules, you might need to expand the scope to include those interactions or split the work into phases to handle each dependency separately. Start by outlining the broader task, then use the AI to identify specific components that require changes. From there, create focused task contexts for each piece, ensuring the AI fully understands one component before moving to the next.
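Once the subtasks and their dependencies are written down, a safe execution order follows mechanically. This sketch uses `graphlib.TopologicalSorter` from the Python standard library (3.9+). The subtask names mirror the user management example above, and the dependency edges are an assumption about how those steps relate.

```python
from graphlib import TopologicalSorter

# Each subtask maps to the set of subtasks that must finish first.
SUBTASKS = {
    "extract_validation_service": set(),
    "update_user_controller": {"extract_validation_service"},
    "migrate_tests": {"update_user_controller"},
    "update_docs": {"update_user_controller"},
}

def execution_order(subtasks):
    """Return the subtasks in an order that respects every dependency."""
    return list(TopologicalSorter(subtasks).static_order())

ORDER = execution_order(SUBTASKS)
```

Each entry in `ORDER` can then become its own focused task context, handed to the AI one at a time.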

CodeRide: Full-Project Context and Automated Workflows

CodeRide tackles the challenge of context amnesia in AI-assisted development by ensuring the AI always has a complete understanding of your codebase. No need for manual embeddings or external context files - CodeRide handles it all automatically.

CodeRide's Context Features

CodeRide dives deep into your entire codebase, documentation, and configurations to provide the AI with a full understanding of your project. This includes architecture, coding patterns, and dependencies.

It doesn’t stop there. CodeRide tracks relationships and dependencies within your code, so when you ask the AI to modify a function, it already knows which other components rely on that function. This allows it to suggest updates across the board, ensuring nothing is overlooked.

The system also learns from your existing coding style, including variable naming conventions and architectural preferences. This helps ensure that any new code it generates aligns with your team's standards. Plus, its token economy approach keeps the AI focused on the most relevant context, so it stays efficient while maintaining a broad understanding of your project.

Task Optimization and Automated Development

With its comprehensive context awareness, CodeRide takes development efficiency further through automated task optimization. Working through the Model Context Protocol (MCP), an open standard for connecting AI assistants to external tools and data, it transforms brief task descriptions into detailed, step-by-step plans tailored for the AI.

For instance, if you request user authentication, it might break the request down into steps like updating the database schema, creating API endpoints, implementing middleware, and generating test cases - all while respecting your existing architecture.

CodeRide also integrates directly with your IDE, enabling autonomous development. The AI can make code changes, run tests, and even create pull requests. Routine tasks become effortless with workflow automation. For example, after adding a new API endpoint, CodeRide can save that workflow and reuse it for similar tasks in the future, cutting down on repetitive work and speeding up development timelines.

These features ensure seamless integration with your existing tools, making development smoother and more efficient.

Setting Up CodeRide

Getting started with CodeRide is simple. Connect your repository to the platform - currently in free beta - and gain immediate access to its full-project AI context.

Once connected, CodeRide analyzes your codebase, identifying key architectural patterns and building a detailed understanding of your project. This process only takes a few minutes and runs in the background without interrupting your workflow.

CodeRide integrates with tools like VS Code, Cursor, and GitHub Copilot through a lightweight extension, ensuring the AI always has the context it needs to assist you effectively.

You can also customize how the AI works through task optimization settings. Define your preferences for code organization, testing, and documentation, and the AI will follow these guidelines consistently. The MCP configuration further allows you to set up automated workflows for your most frequent tasks. By learning from your coding habits, CodeRide can suggest workflow templates tailored to your project type, giving you precise, context-aware support.

Conclusion: Getting the Most from AI in Software Development

Key Steps to Maximize AI Coding Efficiency

To make the most of AI in coding, start by ensuring your codebase is well-organized. This includes maintaining a clear structure, using consistent naming conventions, and providing concise documentation. A clean and accessible codebase gives AI the context it needs to work effectively.

Set up searchable indices using embeddings so that the most relevant code fits within the model's context window. This allows the AI to locate relevant functions, classes, and dependencies quickly. Automating context scoping is also essential to filter out irrelevant suggestions, saving time and improving accuracy.

Another crucial step is task structuring. Utilize reusable context files that outline your coding standards, architectural patterns, and any project-specific requirements. This ensures the AI produces results that align with your development goals. Additionally, test how well the AI retains your code style and handles cross-file dependencies to fine-tune its performance.

These practices lay the groundwork for tools like CodeRide, which takes AI-assisted development to the next level.

How CodeRide Transforms AI-Assisted Development

CodeRide builds on these strategies by automating much of the context analysis process. Instead of manually feeding the AI information about your project, CodeRide scans your entire project structure, dependencies, and coding patterns. This automation means you can skip the repetitive task of explaining your codebase and dive straight into building new features.

Working through the Model Context Protocol, the platform turns basic tasks into detailed, actionable plans that align with your project’s architecture. Whether you’re creating new features or maintaining existing ones, the AI generates code that feels like a natural extension of your work.

What really sets CodeRide apart is its autonomous development capabilities. It can handle multi-step workflows, run tests, and even create pull requests, all with minimal intervention. Workflow automation adapts to your team’s habits, making it easy to set up templates for recurring tasks like API development or database updates.

Currently in free beta, CodeRide offers development teams a chance to experience full-project AI context without the hassle of setting up embeddings or managing context files. The platform takes care of the technical heavy lifting, allowing you to focus on building software with an AI assistant that truly understands your codebase.

FAQs

How can I help my AI coding assistant understand my project's architecture and dependencies?

To help your AI coding assistant get a solid grasp of your project's architecture and dependencies, start by sharing clear, comprehensive documentation. This should outline your system's structure, key components, and how everything works together. Adding architectural diagrams can be a game-changer, offering a visual snapshot of your project's design for better understanding.

Another useful step is leveraging dependency analysis tools. These tools map out the relationships within your codebase, highlighting intricate interdependencies. This added context - through documentation, diagrams, and dependency mapping - equips the AI to provide more accurate suggestions, streamline automation, and assist effectively with troubleshooting.

What are the best practices for creating metadata to help AI understand my codebase?

To help AI better comprehend your codebase, make sure to provide clear and consistent metadata. Stick to standardized formats and protocols, ensuring the metadata is easily readable by machines. Clearly outline your data structures, variables, and business logic so the AI can interpret and interact with your code more effectively.

In addition, include annotations and comments that explain the semantics of your code. These insights not only make it easier for AI to automate tasks but also enhance its ability to offer precise support, streamlining your development process. By focusing on consistency and clarity, you pave the way for smoother AI integration and improved performance with your coding tools.

How does CodeRide simplify providing full-project context to AI tools, and why is this important for developers?

CodeRide simplifies the way AI tools understand your codebase by seamlessly integrating with your project. Using advanced techniques like embeddings and context windows, it ensures that AI systems can instantly access all relevant code and documentation - no manual setup required.

For developers, this translates to spending less time managing context and more time doing what matters: coding. It sharpens the accuracy of AI-driven suggestions, streamlines task automation, and speeds up workflows, making the entire development process more efficient and productive.
